In collaboration with the Indian Mental Health Summit (IMHS), we explored some of the most pressing questions clinicians are facing today. With the rapid rise of AI in healthcare, many practitioners are asking the same thing: How do I adopt these tools responsibly while maintaining ethical standards and protecting client trust?

AI tools like ChatGPT promise to reduce hours of administrative work, support clinical workflows, and improve efficiency. Yet, alongside this excitement comes uncertainty. Where are the boundaries around ethics, confidentiality, and responsible use? How do clinicians embrace innovation without compromising professional values?

In this post, we address some of the most common and urgent questions raised by practitioners around the world, offering clarity on how AI can be integrated thoughtfully, safely, and ethically into modern mental healthcare.

Will AI Replace Therapists?

This has been one of the most common questions raised across the webinars we’ve hosted, and it deserves a thoughtful, honest discussion. The short answer is no, but the reality comes with important nuances.

1. Accessibility

Unlike human professionals, AI is available instantly. Over the past year, it has increasingly become the first place many people turn to when they need clarity, reassurance, or guidance, often before considering speaking to another person. This shift changes how individuals begin their mental health journey, but it does not replace the depth of clinical care.

2. The Rise of AI Mental Health Coaches

Applications such as Ash, Wysa, and similar platforms position themselves as accessible alternatives or complements to therapy. At the same time, many individuals simply open tools like ChatGPT for everyday reflection or advice. This growing ecosystem creates new entry points into mental health support, but it also raises questions about boundaries, expectations, and responsible use.

3. Instant Validation

AI often reflects and validates a user’s thoughts and experiences, which can feel comforting in the moment. However, therapy is not built solely on validation. Clinical work frequently involves challenge, reflection, and structured intervention, elements that go beyond what most AI systems are designed to provide.

Given these realities, it is natural for clinicians to view AI as a potential threat. Yet, in our observations, clear patterns suggest that AI is not replacing therapists, but rather reshaping how individuals engage with care and when they choose to seek professional support. Several recurring themes highlight why AI-powered mental health products still fall short of replacing human connection.

1. The Need for Genuine Human Connection

While AI has begun replacing certain administrative roles such as front-desk support or intake assistance, many users continue to express a desire to “talk to a human” when conversations become deeply personal. Across online discussions and user feedback, a consistent concern emerges: people may experiment with AI for convenience, but they still prefer human clinicians when trust, vulnerability, and complex emotional experiences are involved.

2. Repetitive Insights

Many users report that AI conversations begin to feel circular over time. Similar problems often produce similar responses, even if phrased differently. Without deeper contextual understanding or long-term clinical judgment, the interaction can lack the evolving insight that comes from a therapeutic relationship, leaving individuals feeling as though they are not progressing meaningfully.

3. The Illusion of Progress

AI systems often provide reassurance and validation, which can feel supportive in the moment. However, consistent affirmation does not always translate into meaningful behavioral or emotional change. Without structured therapeutic goals, accountability, or clinical judgment, users may feel encouraged without actually moving forward. Over time, some individuals realize that while conversations with AI feel comforting, deeper patterns remain unchanged, highlighting the importance of human-led therapy that balances empathy with challenge and measurable progress.

Tools You Can Integrate Into Your Workflow

Below are examples of tools that offer healthcare-focused security practices and can help streamline everyday clinical tasks. As always, clinicians should verify compliance claims directly with the vendor and ensure appropriate agreements are in place before using any platform with sensitive data.

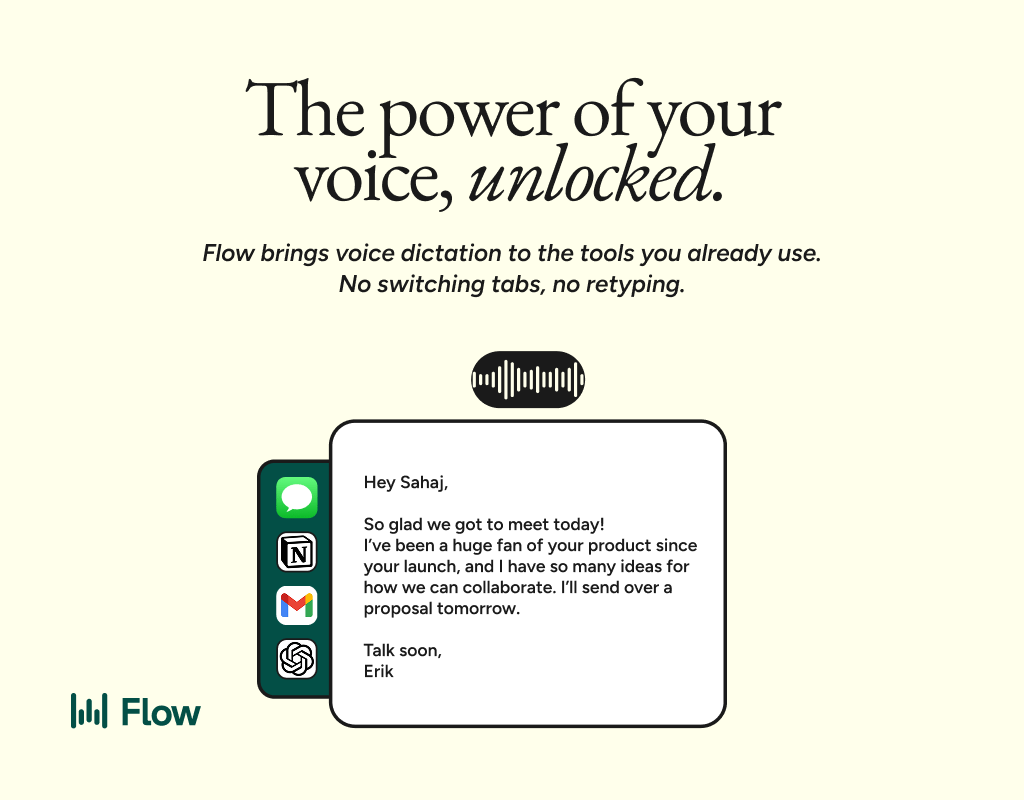

Wispr Flow

Replace manual typing with voice-based dictation. Make sure to get your signed BAA (Business Associate Agreement) from Wispr as mentioned here.

Better prompts. Better AI output.

AI gets smarter when your input is complete. Wispr Flow helps you think out loud and capture full context by voice, then turns that speech into a clean, structured prompt you can paste into ChatGPT, Claude, or any assistant. No more chopping up thoughts into typed paragraphs. Preserve constraints, examples, edge cases, and tone by speaking them once. The result is faster iteration, more precise outputs, and less time re-prompting.

Perplexity (For Research)

Perplexity can be a powerful research companion for clinicians who want quick access to summaries, citations, and emerging literature without spending hours navigating multiple databases. It helps surface relevant sources, condense complex topics, and speed up the early stages of evidence gathering.

For mental health professionals, this can be useful when exploring treatment frameworks, reviewing recent studies, or preparing psychoeducation material. The ability to see referenced sources also makes it easier to verify information rather than relying on generic AI responses.

That said, research tools should be used thoughtfully. Avoid entering any identifiable client information when conducting searches, and treat AI-generated summaries as a starting point rather than a final clinical reference. Always verify important claims against original sources, especially when applying insights to patient care.
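For practices with some technical support, the advice to keep identifiable client information out of searches can be backed by a simple pre-check. The sketch below is illustrative only: the pattern list and the function name `flag_identifiers` are assumptions made for this example, not part of Perplexity or any other tool. It catches only obvious formats such as email addresses, phone-like numbers, and numeric dates. It cannot detect names or contextual details, so it supplements careful reading rather than replacing it.

```python
import re

# Hypothetical patterns for a few obvious identifier formats.
# This list is an assumption for illustration and is far from exhaustive.
PATTERNS = {
    "email address": re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"),
    "phone-like number": re.compile(r"\+?\d[\d\s().-]{7,}\d"),
    "date": re.compile(r"\b\d{1,2}[/-]\d{1,2}[/-]\d{2,4}\b"),
}


def flag_identifiers(query: str) -> list[str]:
    """Return labels of identifier patterns found in a draft search query."""
    return [label for label, pattern in PATTERNS.items() if pattern.search(query)]


draft = "sleep interventions for client jane@example.com, born 12/03/1990"
found = flag_identifiers(draft)
if found:
    print("Review before searching, possible identifiers:", ", ".join(found))
else:
    print("No obvious identifiers found. Still reread for names and details.")
```

An empty result does not mean a query is safe to send. It only means none of these few patterns matched.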

Consensus (For Evidence-Based Research)

Consensus is an AI-powered research tool designed specifically around peer-reviewed academic literature. It helps clinicians quickly search and analyze findings across millions of research papers, including clinical guidelines and top medical journals, making literature reviews faster without losing scientific rigor. Features like medical-focused filtering and tools that highlight where research agrees or disagrees allow practitioners to explore evidence more efficiently while still grounding decisions in validated sources rather than generic AI summaries.

Superhuman (For Email Productivity)

Superhuman is an AI-powered email client designed to help professionals manage high volumes of communication more efficiently. Features like AI drafting, keyboard-first workflows, reminders, and follow-up tracking can reduce the time clinicians spend managing administrative emails, scheduling coordination, and professional correspondence. While it can significantly streamline daily communication, clinicians should avoid including protected health information in email workflows unless appropriate security measures and agreements are in place, and always align usage with their organization’s privacy and compliance policies.

While many tools can support clinicians today, we believe the future lies in integrated platforms built specifically for mental healthcare.

At Vybz Health, when we developed Serene, our mission was to use AI to enhance clinical workflows while keeping care fundamentally human. Every feature is built with a clinician-first mindset, ensuring that professional judgment, privacy, and ethical responsibility come before technology. Our goal is not to replace the therapeutic relationship, but to reduce administrative burden so clinicians can spend less time on repetitive tasks and more time focused on meaningful care. Behind Serene is a dedicated team of mental health professionals, advisors, and researchers working together to ensure the platform supports clinicians in delivering the highest standard of care every single day.

Things To Look Out For

If you are using an AI platform, one of the first things to understand is where the company is incorporated and which legal frameworks apply to how your data is handled. Compliance is often tied to the jurisdiction of the platform itself, not just where you or your clients are located.

HIPAA (Platforms Incorporated or Operating in the United States)

If the platform is based in the U.S. and claims to support healthcare workflows, it should offer HIPAA-aligned safeguards and be willing to sign a Business Associate Agreement (BAA). Without this, any identifiable clinical information entered into the system may not be protected under healthcare regulations, regardless of the platform’s marketing claims.

GDPR (Platforms Incorporated in the EU or Serving EU Data)

If a platform is incorporated in Europe or processes European user data, it should clearly state GDPR alignment. Look for transparency around consent, data processing purposes, retention timelines, and where servers are located. Platforms operating under GDPR typically provide clearer controls around deletion and data access.

India and Other Emerging Privacy Frameworks

If a platform is incorporated in countries like India, review how it aligns with regulations such as the Digital Personal Data Protection (DPDP) Act. Enforcement models and liability structures may differ from HIPAA or GDPR, so clinicians should verify how the company handles cross-border data transfers and whether strong privacy commitments are documented.

What This Means in Practice

Before adopting any AI tool, check:

Where the company is legally incorporated

Which compliance frameworks it explicitly follows

Whether legal agreements (like BAAs or data processing agreements) are available

Where your data is stored and processed

Compliance is not universal across AI products, and understanding jurisdictional differences is key to protecting both your workflow and your clients’ data.
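For teams that track vendor reviews in a shared script or spreadsheet export, the four checks above can be recorded in a small structure. This is a minimal sketch under stated assumptions: the class `VendorReview`, its field names, and the vendor "ExampleTool" are hypothetical and invented for illustration. It only records what you have confirmed with a vendor and does not verify any compliance claim on its own.

```python
from dataclasses import dataclass, field


@dataclass
class VendorReview:
    """Answers to the four checks for one AI tool (hypothetical structure)."""
    name: str
    incorporated_in: str = ""          # e.g. "US", "EU", "India"
    frameworks: list[str] = field(default_factory=list)  # e.g. ["HIPAA"]
    agreement_signed: bool = False     # BAA or data processing agreement in place
    data_location: str = ""            # where data is stored and processed


def open_items(review: VendorReview) -> list[str]:
    """Return the checks that have not been confirmed yet."""
    gaps = []
    if not review.incorporated_in:
        gaps.append("Where the company is legally incorporated")
    if not review.frameworks:
        gaps.append("Which compliance frameworks it explicitly follows")
    if not review.agreement_signed:
        gaps.append("Whether a BAA or data processing agreement is in place")
    if not review.data_location:
        gaps.append("Where your data is stored and processed")
    return gaps


# "ExampleTool" is a made-up vendor used only to show the output.
review = VendorReview(name="ExampleTool", incorporated_in="US", frameworks=["HIPAA"])
for item in open_items(review):
    print("Still to confirm:", item)
```

In this example, two items remain open: the signed agreement and the data location. Both would need an answer from the vendor before any sensitive data is entered.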

Ethical AI is not about avoiding innovation; it’s about learning how to use it responsibly. The more clinicians understand privacy, bias, transparency, and accountability in AI systems, the more confidently they can integrate these tools while preserving trust, autonomy, and the human core of mental healthcare.